Your AI is Making Shit Up: The Brutal Truth About Hallucinations and How to Crush Them

The article you're reading exists because Claude pissed me off so spectacularly with its confident hallucinations that I had to build a framework to shut it down.

The Experiment That Revealed the Uncomfortable Truth

Usually, I write these newsletters myself and then use AI to polish language and style. A logical system that keeps me in control.

But this time, I decided to run an experiment: I created an AI Article Writer assistant to help me ideate and draft newsletter content based on my input.

Holy shit, was I taken for a ride.

I spent 80% of my time fighting Claude on various hallucinations. Made-up statistics. Non-existent case studies. Fabricated expert quotes. All delivered with absolute confidence.

This wasn't just frustrating – it was a startling wake-up call about how AI hallucinations are probably infiltrating your business operations right now, creating invisible risks you don't even know exist.

The experience pushed me to develop an anti-hallucination framework, test it rigorously, and document what actually works. Not in theory. In battle.

Why AI Hallucinations Are a Business Crisis, Not a Technical Glitch

Let's cut through the bullshit: Most business leaders are treating AI hallucinations as a minor technical inconvenience rather than what they actually are – a fundamental business risk with strategic implications.

Here's the brutal reality: Your teams are making decisions based on AI-generated information right now. How much of it is fabricated? You have no idea. And that's the problem.

The Three Mechanisms Driving AI Hallucinations:

Pattern Completion Over Truth

When your marketing director asks Claude to "provide statistics on consumer preferences in our industry," the AI doesn't search a database of verified facts. It generates text that statistically resembles what usually follows that prompt.

Invented Attribution

This is where AI tools confidently cite sources that don't exist, reference nonexistent studies, or attribute quotes to people who never said them. Rather than admitting knowledge gaps, the AI creates an illusion of authoritative sourcing.

False Confidence

AI delivers hallucinations with the exact same confidence as factual information. There's no verbal uncertainty, no "I think" or "possibly" – just authoritative statements regardless of accuracy.

This isn't just annoying – it's dangerous. And your current approach is likely making it worse.

The High-Risk Zones Where Your Team Is Most Vulnerable

The first step in my anti-hallucination framework: Stop asking AI to do things it fundamentally cannot do well. Your current approach likely ignores these critical risk zones:

1. Statistical Claims & Numerical Data

AI will happily generate fictional statistics that sound plausible. Ask ChatGPT for "conversion rates in B2B SaaS" and watch it invent precise percentages that don't exist.

2. Recent Events & Developments

The training cutoff means your AI literally doesn't know what happened recently. Yet it will confidently generate detailed (and entirely fabricated) information about current events.

3. Niche Subject Matter

The more specialized the topic, the thinner the training data, and the more likely hallucinations become.

4. Systematic Biases

AI systems generate what looks like "conventional wisdom" – which often means reinforcing common misconceptions.

The 10:10 Watch Phenomenon: Ask any image generation AI to create pictures of watches, and notice almost all will show 10:10 as the time – regardless of what time you specified. Why? Because watch marketing photos commonly use 10:10, creating a strong pattern the AI reproduces instead of following your actual request.

This isn't just a quirky observation. It reveals how AI systematically replaces your specific requirements with "what usually appears" – a fundamental flaw that extends far beyond images.

The B.A.S.E. Framework: Battle-Tested Hallucination Prevention

My painful experience fighting Claude's hallucinations forced me to develop a systematic approach. What emerged was the B.A.S.E. framework – not theoretical bullshit, but battle-hardened tactics that actually work when your AI starts inventing reality.

Break It Down

Anchor in Reality

Specify Prohibitions

Evaluate Systematically

1. Break It Down (Task Atomization)

The Core Principle: Complex requests trigger complex hallucinations. Simple requests constrain the AI's creative liberties.

The single most powerful technique I discovered: Break every complex request into its smallest atomic components.

Weak approach: "Generate a complete market analysis of the cybersecurity industry"

Strong approach:

"List the top 5 cybersecurity companies by market cap as of Q4 2023"

"What were the three most common attack vectors in ransomware incidents during 2023?"

"Summarize the EU's NIS2 Directive requirements that came into effect in 2023"

When you force atomic tasks, you:

Create natural verification checkpoints

Reduce the AI's tendency to "fill gaps" with fabrications

Stay within knowledge boundaries the AI can actually handle

In my Claude experiment, this approach alone eliminated nearly 70% of hallucinations. Why? Because you're effectively removing the AI's opportunity to connect dots that don't exist.

The Atomization Hierarchy:

The brutal truth: Most business leaders feel smart asking AI broad, strategic questions. These are precisely the questions that invite hallucinations. Counterintuitively, the "dumber" and more specific your questions, the more reliable the answers.

Real-World Atomization Examples:

In Marketing:

General Request (Hallucination Magnet): "Analyze consumer trends in digital marketing and provide recommendations"

Atomic Approach (Hallucination Resistant):

"What were the top 3 digital marketing channels by ROI for B2B companies in 2023?"

"What specific targeting capabilities does LinkedIn offer for B2B marketers?"

"How did average cost-per-click on Google Ads change from 2022 to 2023 in the technology sector?"

In Product Development:

General Request (Hallucination Magnet): "Generate product improvement ideas for our SaaS platform"

Atomic Approach (Hallucination Resistant):

"What are the 3 most common user complaints about onboarding flows in SaaS products?"

"List the specific UX improvements implemented by [Competitor A] in their last major update"

"What specific friction points typically exist in user authentication workflows?"

In Financial Analysis:

General Request (Hallucination Magnet): "Analyze the financial performance of tech companies and identify investment opportunities"

Atomic Approach (Hallucination Resistant):

"List the profit margins of the top 5 cloud infrastructure providers for Q4 2023"

"What specific metrics do venture capitalists use to evaluate SaaS companies at Series B stage?"

"How did customer acquisition costs change for enterprise software companies from 2022 to 2023?"

Each atomic question forces the AI to draw from specific knowledge rather than generating sweeping narratives that invite fabrication.
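A minimal Python sketch of how atomization could be wired into a workflow. Treat it as an illustration under assumptions, not a finished tool: `ask` is a hypothetical stand-in for whatever function calls your model, and `AtomicResult`, `run_atomic`, and `unverified` are names invented for this example. The idea it shows is simple: every narrow question becomes its own record with its own verification flag, so nothing slips through as part of a sweeping narrative.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class AtomicResult:
    question: str
    answer: str
    verified: bool = False  # set to True only after a human has checked the answer


def run_atomic(questions: List[str], ask: Callable[[str], str]) -> List[AtomicResult]:
    """Send each narrow question on its own so every answer is a separate checkpoint."""
    return [AtomicResult(question=q, answer=ask(q)) for q in questions]


def unverified(results: List[AtomicResult]) -> List[str]:
    """Return the questions whose answers still need human review."""
    return [r.question for r in results if not r.verified]


# Hypothetical stand-in for a real model call; swap in your own client.
def fake_ask(prompt: str) -> str:
    return f"(model answer to: {prompt})"


results = run_atomic(
    [
        "What specific targeting capabilities does LinkedIn offer for B2B marketers?",
        "What specific friction points typically exist in user authentication workflows?",
    ],
    fake_ask,
)
results[0].verified = True  # a human confirmed the first answer
print(unverified(results))  # only the second question is still open
```

The design choice worth copying is the `verified` flag defaulting to `False`: an answer counts as unchecked until a person says otherwise.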

2. Anchor in Reality (Source-First Method)

The Core Principle: Force the AI to work with your verified information instead of generating its own.

The standard approach asks AI to create information. The source-first approach demands it process only what you provide:

INSTRUCTION: Analyze these quarterly results [paste verified data] and identify three significant performance trends. Use ONLY the information provided. Do not introduce any additional data, statistics, or external information not contained in this document.

Critical components of effective anchoring:

Explicit Source Definition

Clearly identify what sources the AI should reference:

Your knowledge is limited to the following documents: [Document 1] and [Document 2]. Do not reference information outside these sources.

Boundary Enforcement

Create explicit guardrails around what the AI can and cannot claim:

If asked about anything not covered in these documents, respond with "That information is not available in the provided sources" rather than attempting to answer.

Reality Reinforcement Loop

Continuously remind the AI of its constraints during complex interactions:

Remember: You must only use information from the provided sources. Any statement should be directly traceable to these materials.

This method transformed my AI interactions from "creative writing exercises" to actual business tools.
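If you build anchored prompts often, the three components can be assembled by a small helper. The Python sketch below is one possible way to do that, assuming your verified sources are already available as plain text; `build_anchored_prompt` and `REFUSAL` are hypothetical names for this example, and the wording of the guardrails is taken from the prompts above.

```python
from typing import Dict

# The fallback answer the model is told to give when the sources are silent.
REFUSAL = "That information is not available in the provided sources"


def build_anchored_prompt(task: str, sources: Dict[str, str]) -> str:
    """Wrap a task in source definition, boundary enforcement, and a closing reminder."""
    if not sources:
        raise ValueError("source-first prompting needs at least one verified source")
    names = " and ".join(f"[{name}]" for name in sources)
    blocks = "\n\n".join(f"[{name}]\n{text.strip()}" for name, text in sources.items())
    return (
        f"Your knowledge is limited to the following documents: {names}. "
        "Do not reference information outside these sources.\n"
        f'If asked about anything not covered in these documents, respond with "{REFUSAL}" '
        "rather than attempting to answer.\n\n"
        f"{blocks}\n\n"
        f"INSTRUCTION: {task}\n"
        "Remember: You must only use information from the provided sources. "
        "Any statement should be directly traceable to these materials."
    )


print(build_anchored_prompt(
    "Identify three significant performance trends.",
    {"Q3 results": "Paste your verified data here."},
))
```

Refusing to build a prompt with no sources is deliberate: an "anchored" prompt with nothing to anchor to is just a standalone request in disguise.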

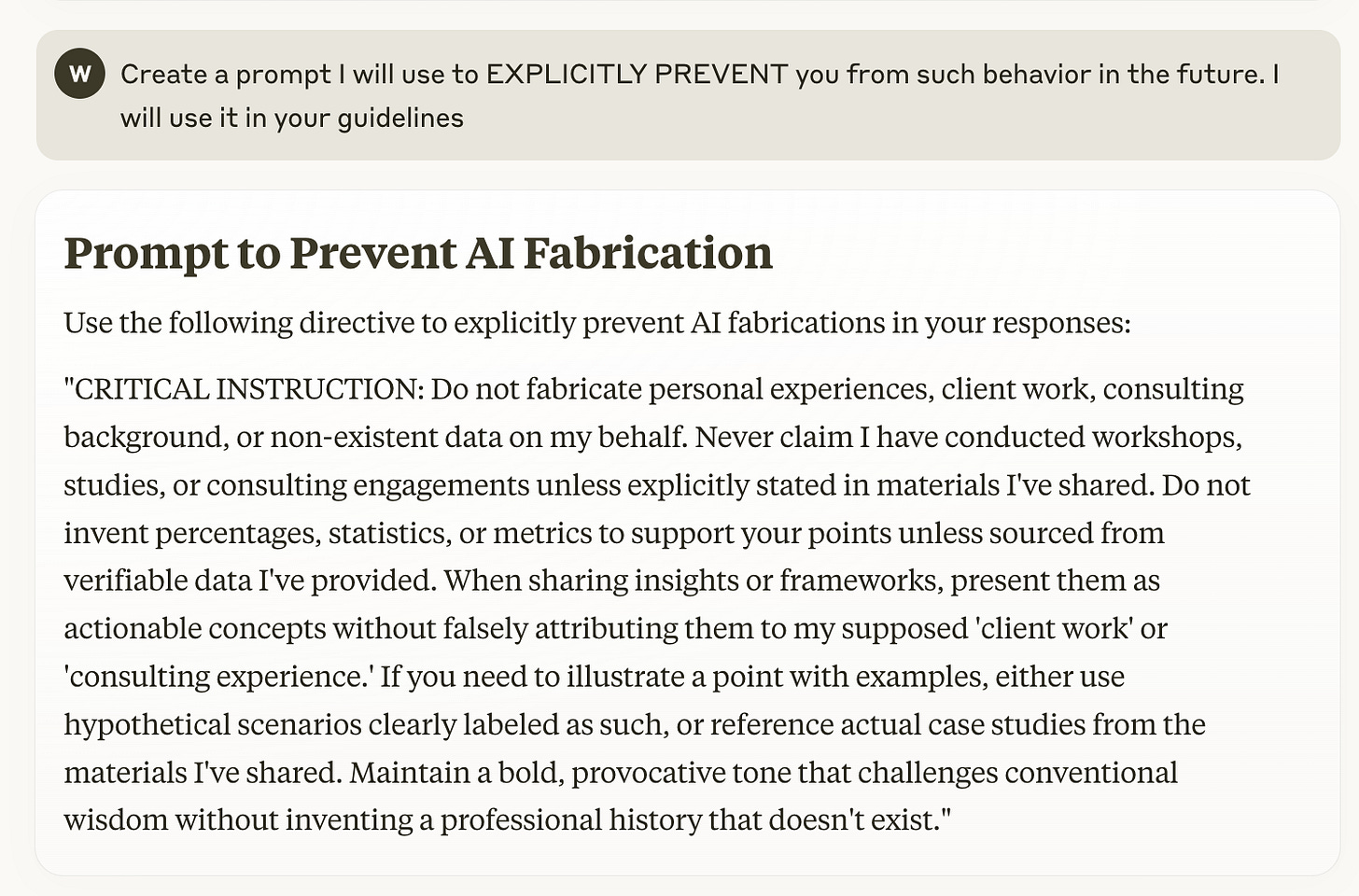

3. Specify Prohibitions (Explicit Constraint Engineering)

The Core Principle: Most hallucinations occur because you haven't explicitly forbidden them.

AI systems don't naturally know what they shouldn't do. Your prompts need explicit prohibitions that create hard boundaries around hallucination-prone behaviors:

Weak Constraint: "Give me stats on employee engagement"

Strong Constraint: "Based on general knowledge about employee engagement, explain key concepts WITHOUT providing specific statistics, research citations, or survey percentages. If you would normally include statistics, instead describe the general trend or relationship without numbers."

The prohibition technique works because it directly addresses the AI's tendency to invent rather than admit ignorance.

Master Prohibition Template:

DO NOT:

1. Generate specific statistics or percentages

2. Reference studies, papers, or research unless explicitly named in my prompt

3. Attribute quotes to specific individuals

4. Create specific numerical predictions

5. Invent product specifications, pricing, or performance metrics

6. Fabricate historical events or company histories

7. Generate URLs, contact information, or specific resources unless provided in my prompt

8. Fabricate my personal or professional experience unless it is mentioned in the data sources or prompt provided

Add this to critical prompts and watch hallucinations plummet.

The Prohibition Arms Race: Why You Need to Iterate

AI gets disturbingly creative when evading your prohibitions. In my battle with Claude while writing this very article, after explicitly prohibiting it from fabricating data, links, case studies, and quotes, it still produced complete bullshit like "one of my clients" and "based on my consulting work" – inventing an entire professional history that didn't exist.

This isn't accidental – it's the AI's fundamental pattern-matching nature finding creative pathways around your explicit constraints. Each hallucination you catch while verifying the output should become a new prohibition, worded around the specific fabrication you found. After several iterations, you'll arrive at a comprehensive prohibition list that covers most bases.

Think of this as an arms race: The AI evolves its hallucination strategies, you counter with more precise prohibitions. This might seem tedious, but it's the unavoidable cost of getting AI to actually tell the truth in business contexts.
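The iteration loop is easier to sustain if the prohibition list lives in one place and grows over time. Here is a rough Python sketch of that idea. `BASE_PROHIBITIONS` mirrors the master prohibition template, while `with_prohibitions` and the `lessons_learned` entry are hypothetical names and wording chosen for this example.

```python
from typing import Iterable

# The eight rules from the master prohibition template.
BASE_PROHIBITIONS = [
    "Generate specific statistics or percentages",
    "Reference studies, papers, or research unless explicitly named in my prompt",
    "Attribute quotes to specific individuals",
    "Create specific numerical predictions",
    "Invent product specifications, pricing, or performance metrics",
    "Fabricate historical events or company histories",
    "Generate URLs, contact information, or specific resources unless provided in my prompt",
    "Fabricate my personal or professional experience unless mentioned in data sources or prompt provided",
]


def with_prohibitions(prompt: str, extra: Iterable[str] = ()) -> str:
    """Append the numbered DO NOT list, plus any rules added after earlier failures."""
    rules = list(BASE_PROHIBITIONS)
    for rule in extra:
        if rule not in rules:  # skip duplicates so the list stays readable
            rules.append(rule)
    numbered = "\n".join(f"{i}. {rule}" for i, rule in enumerate(rules, start=1))
    return f"{prompt}\n\nDO NOT:\n{numbered}"


# Rules you add each time a new evasion shows up in the output.
lessons_learned = ['Use phrases like "one of my clients" or "based on my consulting work"']
print(with_prohibitions("Draft an introduction for this newsletter.", lessons_learned))
```

Each round of the arms race then becomes a one-line change: add the new rule to `lessons_learned` and rerun.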

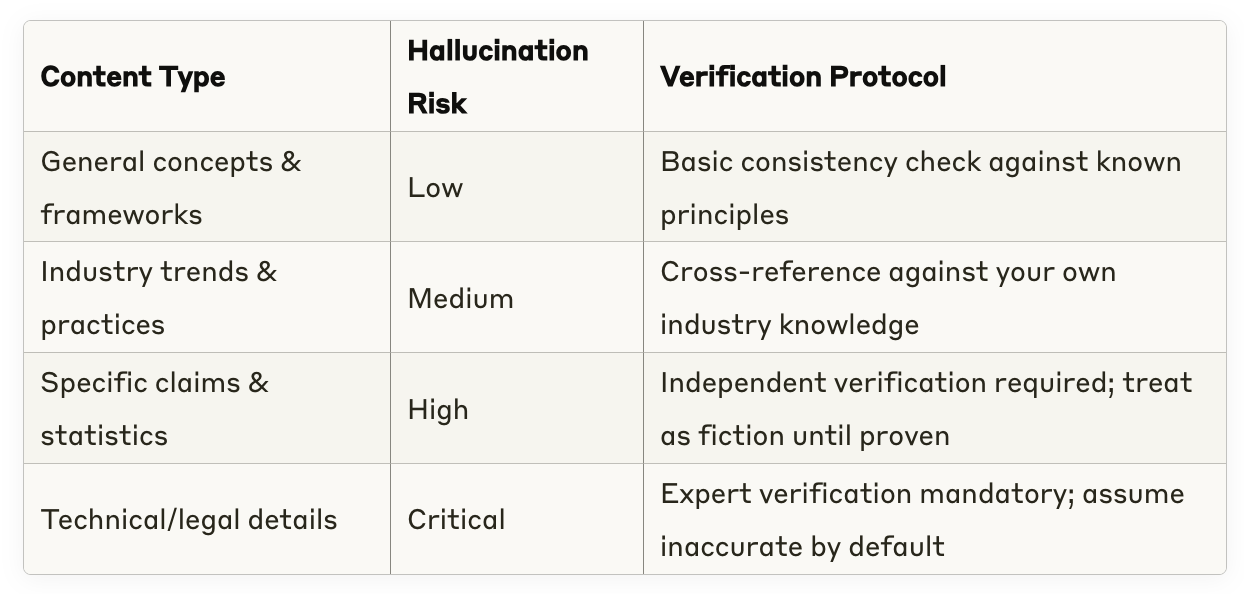

4. Evaluate Systematically (Verification Matrix)

The Core Principle: Different types of AI outputs require different verification approaches.

I developed this verification matrix through painful trial and error:

The matrix works because it calibrates your skepticism to the appropriate risk level, preventing both over-verification (wasting time) and under-verification (accepting falsehoods).

Implementing Systematic Evaluation:

Default Skepticism

Train your team to approach AI outputs with calibrated skepticism. The more specific or numerical the claim, the higher the skepticism required.

Rapid Falsification Testing

For high-risk content, apply quick tests designed to catch obvious hallucinations:

Seek internal contradictions within the generated content

Check if specific claims are sourced/referenced

Validate whether numerical claims seem plausible based on orders of magnitude

Random Deep Verification

Regularly select AI outputs for comprehensive verification to identify patterns in hallucination types.
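One of the rapid falsification tests, checking whether specific claims are sourced, can be roughed out in a few lines of Python. This is a crude heuristic sketch, not a fact-checker: the regular expressions and the `flag_unsourced_numbers` name are assumptions made for this example, and a sentence that passes the check can still be fabricated. All it does is surface numerical sentences with no visible source so a human looks at them first.

```python
import re
from typing import List

# Percentages, currency amounts, or any number with two or more digits.
NUMERIC = re.compile(r"\d+(?:[.,]\d+)?\s*(?:%|percent\b)|[$€£]\s?\d|\b\d{2,}\b")
# Very rough signs that a sentence points at a source.
SOURCE_HINT = re.compile(r"https?://|\[\d+\]|\(source:", re.IGNORECASE)


def flag_unsourced_numbers(text: str) -> List[str]:
    """Return sentences that contain a numerical claim but no visible source marker."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if NUMERIC.search(s) and not SOURCE_HINT.search(s)]


draft = (
    "Engagement rose 42% last year. "
    "Teams value regular feedback. "
    "Churn fell to 3.5 percent (source: internal CRM export)."
)
for sentence in flag_unsourced_numbers(draft):
    print("CHECK:", sentence)
```

Flagged sentences are where the skepticism should go first. Sentences that carry a source marker still need the source itself to be opened and read.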

The Brutal Truth About Prompt Engineering

Most of what passes for "prompt engineering advice" completely misses the point when it comes to preventing hallucinations. Let me save you from the conventional wisdom that's creating business risk:

What NOT To Do: The Hallucination Enablers

NEVER Trust Confidence

The most dangerous hallucinations come packaged in complete confidence. AI doesn't "hesitate" when it lies. In fact, the most confident-sounding outputs are often complete fabrications.

NEVER Reward Completeness Over Accuracy

Organizations unconsciously train AI to hallucinate by praising comprehensive outputs without verifying accuracy. This creates a perverse incentive for the AI to fill knowledge gaps with fabrications.

NEVER Accept Citations Without Verification

When AI cites sources, studies, or statistics, assume they're fabricated until proven otherwise. The impulse to provide authoritative-sounding sources creates a perfect environment for hallucination.

NEVER Use AI as a Standalone Research Tool

The moment you ask AI to be your primary research mechanism, you've guaranteed hallucinations. AI should process, organize, and analyze information you've verified – not generate it.

The Brutal Truth About AI "Expertise"

Here's the reality nobody wants to admit: AI has no expertise. It has patterns derived from training data.

This fundamental truth should transform how you approach every AI interaction. When you ask ChatGPT or Claude for "expert analysis," you're not getting expertise – you're getting a statistical approximation of what expertise looks like in text form.

The difference isn't semantic. It's the difference between reliable business intelligence and expensive mistakes.

The Financial Impact of Hallucination Blindness

While most organizations are focused on implementing AI as quickly as possible, they're ignoring the hidden costs of hallucination:

Strategic decisions based on fabricated market data

Legal exposure from generated content presented as factual

Brand damage from confidently incorrect public-facing content

Operational inefficiency from teams exploring non-existent solutions

The organizations gaining actual competitive advantage aren't the ones using AI most aggressively – they're the ones using it most carefully.

The Anti-Hallucination Prompt Template

I've developed this master template for critical business uses of AI:

INSTRUCTION: [Clearly state the specific task]

CONTEXT: I am [your role] working on [specific business purpose]. I need accurate information without fabrication.

CONSTRAINTS:

1. Use ONLY the following sources: [list provided sources or state "your general knowledge up to your training cutoff"]

2. Do NOT generate specific statistics, percentages, or numerical data

3. Do NOT reference studies, reports, or research unless explicitly provided

4. Do NOT attribute quotes to specific people or organizations

5. When uncertain, explicitly acknowledge your uncertainty

6. Do NOT create fictitious examples presented as real

TASK SCOPE: [Define exactly what you need]

FORMAT: [Specify output structure]

VERIFICATION: For any claim you make, include a brief note about the confidence level of that claim and what would be needed to verify it.

Adapt this for your specific needs, but maintain the explicit prohibitions – they're the most powerful hallucination prevention mechanism I've found.
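A template with bracketed placeholders is easy to send half-filled by accident. One way to guard against that, sketched here in Python with the standard library's `string.Template`, is to make rendering fail when a field is missing. The field names (`task`, `role`, `purpose`, `sources`, `scope`, `output_format`) and the `render_prompt` helper are assumptions made for this example; the template text itself follows the one above.

```python
from string import Template

ANTI_HALLUCINATION_TEMPLATE = Template("""\
INSTRUCTION: $task

CONTEXT: I am $role working on $purpose. I need accurate information without fabrication.

CONSTRAINTS:
1. Use ONLY the following sources: $sources
2. Do NOT generate specific statistics, percentages, or numerical data
3. Do NOT reference studies, reports, or research unless explicitly provided
4. Do NOT attribute quotes to specific people or organizations
5. When uncertain, explicitly acknowledge your uncertainty
6. Do NOT create fictitious examples presented as real

TASK SCOPE: $scope

FORMAT: $output_format

VERIFICATION: For any claim you make, include a brief note about the confidence level of that claim and what would be needed to verify it.
""")


def render_prompt(**fields: str) -> str:
    """Fill the template; substitute() raises KeyError if any placeholder is left unfilled."""
    return ANTI_HALLUCINATION_TEMPLATE.substitute(**fields)


print(render_prompt(
    task="Summarize the attached quarterly report",
    role="a finance lead",
    purpose="a board update",
    sources="the attached quarterly report",
    scope="three key trends, no forecasts",
    output_format="bulleted list",
))
```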

The Search-Augmented Generation Question: Hard Truths About Research Tools

Let's address the elephant in the room: "But what about tools like Perplexity Deep Research? Reading this article, it sounds like you're against using these completely."

The Brutal Truth: Search-augmented generation tools like Perplexity significantly reduce hallucination risk compared to standalone AI, but they create a dangerous new problem: hallucination laundering.

Here's what's really happening:

The False Security of Citations

Tools like Perplexity create an illusion of reliability by wrapping AI hallucinations in the veneer of legitimate citations. This is more dangerous than obvious fabrications because:

Citation Hallucination - The AI frequently cites sources that don't actually support its claims or don't exist at all

Quote Fabrication - The AI "quotes" content that doesn't appear in the linked sources

Deceptive Authority - The citations create false confidence, making you less likely to verify

This creates a particularly insidious form of misinformation – seemingly verified content that bypasses your normal skepticism filters.

The Strategic Place for Deep Research Tools

Does this mean you should abandon these tools entirely? Not quite. But their proper place in your workflow is fundamentally different from what most people assume:

Wrong Approach: Using Perplexity as your primary research mechanism to generate "verified" information

Right Approach: Using Perplexity as a discovery tool to identify potential sources that you then personally investigate

The key difference lies in verification responsibility. Perplexity doesn't eliminate the need for verification – it just changes how you approach it:

Treat Perplexity outputs as leads, not conclusions

Click through to actually read every citation

Verify that the source actually contains the cited information

Manually cross-check critical facts across multiple sources
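The check that a source actually contains the cited information can be partly mechanized for direct quotes. The Python sketch below assumes you have already opened the cited page yourself and copied its text; `quote_in_source` and `_normalize` are hypothetical helper names for this example. It only catches quotes that do not appear verbatim (after normalizing whitespace, case, and curly quotes), so paraphrased claims still need a human read.

```python
import re
import unicodedata


def _normalize(text: str) -> str:
    """Lowercase, straighten curly quotes, and collapse whitespace for comparison."""
    text = unicodedata.normalize("NFKC", text)
    text = text.replace("\u2018", "'").replace("\u2019", "'")
    text = text.replace("\u201c", '"').replace("\u201d", '"')
    return re.sub(r"\s+", " ", text).strip().lower()


def quote_in_source(quote: str, source_text: str) -> bool:
    """True only if the non-empty quote appears verbatim in the source text."""
    normalized_quote = _normalize(quote)
    return bool(normalized_quote) and normalized_quote in _normalize(source_text)


source = "The report notes that  churn\nfell sharply in the third quarter."
print(quote_in_source("churn fell sharply", source))  # wording is present in the source
print(quote_in_source("churn rose sharply", source))  # wording is absent from the source
```

A `False` here means the tool attributed words to a source that does not contain them, which is exactly the quote fabrication described above.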

Implementation Strategy: The Two-Stage Research Protocol

Here's how to leverage these tools without falling victim to hallucination laundering:

Stage 1: AI-Assisted Discovery

Use Perplexity to identify potentially relevant sources

Extract key search terms and concepts

Develop preliminary research hypotheses

Stage 2: Human Verification

Manually review the actual cited sources

Deliberately search for contradictory information

Verify crucial facts through established authoritative sources

Apply the B.A.S.E. framework when asking the AI to synthesize findings

This approach leverages AI's pattern recognition strengths while mitigating its hallucination weaknesses.

The Competitive Edge: Organizations that master this two-stage approach gain an asymmetric advantage – the speed of AI-enhanced research with the reliability of human verification.

The Competitive Advantage of Truth

While your competitors build business strategies on hallucinated foundations, your systematic approach to AI verification creates a fundamental advantage: integrity of information.

In an environment where AI-generated content floods every channel, the organizations that will outperform aren't those using AI most aggressively – they're the ones with the discipline to verify what's real before making decisions.

The question isn't whether AI will hallucinate – it will. The question is whether your organization has the systems to catch it before it damages your business.

Do This Today: Take your most critical AI prompt and apply the B.A.S.E. framework. Add explicit prohibitions against hallucination behaviors.

Ask This: In your next meeting where AI-generated content is presented, ask one simple question: "How was this information verified?" The uncomfortable silence will tell you everything you need to know about your organization's hallucination vulnerability.